Master the top AI skills for high-paying jobs in 2025: explore ML, NLP, generative AI, MLOps, and more to launch a successful, future-proof tech career.

The AI job market is booming, and employers are rapidly seeking talent who can build and deploy intelligent systems.

For example, LinkedIn’s data shows AI-related job listings jumped nearly 74% year-over-year, and Coursera reports generative AI as the fastest-growing skill with an 866% surge in demand. Top tech firms (OpenAI, Netflix, Google, etc.) are offering hefty salaries for AI roles. To thrive in this landscape, focus on five key skills: Machine Learning, Natural Language Processing (NLP), Generative AI, Computer Vision, and MLOps. Below, we define each skill, explain its importance for 2025, highlight current/future use cases, list relevant tools, and suggest companies and job titles where the skill is in demand. We also point to ways you can start learning each skill.

| Role | Average Salary (USD, 2025) |
|---|---|
| NLP Engineer | $157,240 |
| Machine Learning Engineer | $164,048 |
| Computer Vision Engineer | $132,077 |
| AI Product Manager | $182,587 |
| AI Architect | $148,441 |

Table: Sample average salaries for top AI-related roles in 2025.

Machine Learning (ML)

Machine learning (ML) is the core skill underpinning most AI work. In ML, algorithms learn patterns from data instead of relying on hard-coded rules. As one source puts it, ML is about teaching computers “to learn from data”. In practice, this means using data (images, text, numbers, etc.) to train models that can make predictions or decisions. For example, an ML model can be trained on years of financial data to predict stock trends, or on medical images to spot disease.
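To make "learning from data" concrete, here is a minimal, dependency-free sketch: instead of hard-coding a rule, the model estimates a slope and intercept from example points using gradient descent on mean squared error. The toy data, learning rate, and epoch count are illustrative assumptions; in practice you would use a library such as scikit-learn.

```python
def fit_line(xs, ys, lr=0.01, epochs=5000):
    """Learn y ≈ w*x + b from data by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Toy data produced by a "hidden" rule y = 3x + 2; the model only sees the examples
xs = [0, 1, 2, 3, 4, 5]
ys = [3 * x + 2 for x in xs]
w, b = fit_line(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f}")
print("prediction for x=10:", round(w * 10 + b, 1))
```

The model recovers parameters close to the hidden rule purely from examples, which is the same idea that scales up to the predictive models described above.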

Why is ML so important for 2025? Quite simply, most AI applications rely on it: virtual assistants, recommendation engines, and autonomous systems all use ML under the hood. The World Economic Forum even lists “AI and machine learning specialists” among the top three fastest-growing jobs. Companies need ML experts to analyze data and build smart systems, and they pay accordingly: at leading tech firms like Google, Amazon, and Meta, experienced ML engineers command base salaries of $170,000–$200,000 (often much more with bonuses).

Current and future use cases: ML is everywhere. Examples include:

- Predictive analytics: forecasting trends in finance, sales or weather.

- Recommendation systems: powering suggestions on Netflix, Spotify, and e-commerce sites.

- Personalization: adapting content (news feeds, ads) to user preferences.

- Autonomous systems: enabling self-driving cars to interpret sensor data and make navigation decisions.

- Healthcare AI: using patient data to predict disease or tailor treatments.

In the future, ML will only expand – think advanced robots that learn by experience, AI-driven manufacturing lines that self-optimize, or personal AI tutors that adapt to each student.

Key tools and platforms: Python is the standard language, along with ML libraries like scikit-learn, TensorFlow, and PyTorch. You’ll also use data tools such as Pandas and NumPy, and cloud ML services (AWS SageMaker, Google Cloud Vertex AI, Azure ML). ML engineers often rely on visualization (Matplotlib, Seaborn) and experiment-tracking tools (e.g. MLflow or Weights & Biases) to manage models. To learn, consider courses like Andrew Ng’s Machine Learning Specialization on Coursera or the Machine Learning Engineer Nanodegree on Udacity. Kaggle competitions are also great practice.

Jobs and companies: Common titles include Machine Learning Engineer, Data Scientist, and AI Engineer. These roles exist across industries. Top tech employers (Google, Amazon/AWS, Microsoft, Facebook/Meta, Apple) hire ML engineers for search, voice assistants, recommendation algorithms, and more. For example, Google’s Search and Ads teams use ML heavily, and Amazon’s Alexa team needs ML for voice understanding. Financial and healthcare companies (JP Morgan, Pfizer, etc.) also recruit ML experts. Entry paths often include software engineering or data science backgrounds.

Getting started: Build a strong math/statistics foundation (linear algebra, probability). Learn Python and practice with small projects (e.g. classification or regression tasks). Follow an introductory ML course (Coursera, Udacity). Apply what you learn by working on sample datasets (e.g. housing prices, image classification). Use ML libraries to build and train models. As you progress, tackle end-to-end projects: scrape data, train a model, and deploy a simple app. This hands-on experience will solidify ML concepts.
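A first classification project follows the same loop you will later run with ML libraries: split labeled data into training and test sets, fit a model, and measure accuracy on examples it has not seen. The sketch below assumes a tiny made-up two-feature dataset and uses a hand-written k-nearest-neighbors classifier so it runs with the standard library alone; in scikit-learn the same steps map onto `train_test_split`, `KNeighborsClassifier`, and `accuracy_score`.

```python
import math
import random
from collections import Counter

def knn_predict(train, point, k=3):
    """Predict a label by majority vote among the k closest training examples."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

def accuracy(train, test, k=3):
    """Fraction of test examples whose predicted label matches the true label."""
    correct = sum(knn_predict(train, x, k) == y for x, y in test)
    return correct / len(test)

# Hypothetical dataset of (features, label) pairs: two well-separated clusters
random.seed(0)
data = [((random.gauss(1, 0.3), random.gauss(1, 0.3)), "A") for _ in range(50)]
data += [((random.gauss(3, 0.3), random.gauss(3, 0.3)), "B") for _ in range(50)]
random.shuffle(data)

# Hold out 20% of the examples to estimate performance on unseen data
split = int(len(data) * 0.8)
train, test = data[:split], data[split:]
print(f"test accuracy: {accuracy(train, test):.2f}")
```

Real datasets are rarely this clean, which is exactly why the held-out test score, not the training score, is the number to watch.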

Natural Language Processing (NLP)

Natural Language Processing (NLP) is the AI field focused on letting computers understand and generate human language. In NLP, machines process text or speech to perform tasks. For instance, every time you use voice search or chat with a bot, NLP is at work. A recent guide explains that NLP “enables computers to comprehend and respond to text and speech, facilitating smooth and meaningful human-machine interactions”. In other words, NLP teaches machines to read, interpret, and produce language in ways we humans do naturally.

NLP is crucial for 2025 because language is a huge part of technology. Chatbots, translators, digital assistants, and even AI writing tools all rely on NLP. The rapid rise of large language models (like OpenAI’s GPT-4 and Google’s models) has accelerated NLP demand. Industry data predicts the global NLP market will hit $43 billion by 2025, thanks to tools like ChatGPT. This drives high salaries for skilled NLP practitioners: the average pay is often in the $150K+ range for NLP engineers.

Current and future use cases:

- Chatbots and virtual assistants: Siri, Alexa, and Google Assistant use NLP to parse voice commands and provide answers. (E.g. Amazon’s Alexa uses NLP to interpret requests such as locking a smart door or answering a question.)

- Machine translation: Services like Google Translate rely on NLP models to convert between languages.

- Search engines: Understanding user queries and ranking results involves NLP.

- Sentiment analysis: Companies scan social media or reviews to gauge public sentiment on products or events.

- Text generation and summarization: Generative NLP (LLMs) can write articles, summaries, or even code.

- Healthcare and law: NLP helps extract insights from clinical notes or legal documents.
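Search is a good place to see basic NLP mechanics. The sketch below ranks a few made-up documents against a query using TF-IDF weighting and cosine similarity, a classic retrieval baseline; modern search engines layer learned models on top of ideas like this. The regex tokenizer is deliberately naive and the documents are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Lowercase and keep alphanumeric runs; real pipelines use spaCy or NLTK
    return re.findall(r"[a-z0-9]+", text.lower())

def tfidf_vectors(docs):
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    # Smoothed IDF: rare terms get large weights, common terms small ones
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
    vecs = [{t: count * idf[t] for t, count in Counter(toks).items()} for toks in tokenized]
    return vecs, idf

def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

docs = [
    "How to reset your router password",
    "Best pasta recipes for a quick dinner",
    "Troubleshooting slow wifi and router issues",
]
vecs, idf = tfidf_vectors(docs)
# Weight query terms with the same IDF; terms unseen in the documents are ignored
qvec = {t: c * idf[t] for t, c in Counter(tokenize("router wifi slow")).items() if t in idf}
ranked = sorted(range(len(docs)), key=lambda i: cosine(qvec, vecs[i]), reverse=True)
for i in ranked:
    print(f"{cosine(qvec, vecs[i]):.3f}  {docs[i]}")
```

The troubleshooting document ranks first because it matches all three query terms, while the recipe document scores zero.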

Future uses include even smarter conversational agents (AI counselors or tutors), automated content creation for marketing, and real-time translation earphones. As language models improve, virtually every industry will find NLP applications (e.g. auto-generating video game dialogue, analyzing financial reports, etc.).

Key tools and frameworks: Python libraries like spaCy, NLTK, and Gensim handle basic NLP (tokenization, parsing, word vectors). Most cutting-edge work uses deep learning: Hugging Face Transformers is hugely popular for working with pretrained models like BERT, GPT, and T5. TensorFlow/Keras and PyTorch are used for building custom models, and research toolkits such as AllenNLP, Stanford’s CoreNLP, and Facebook’s Fairseq are also widely used. Many cloud platforms offer NLP APIs (Google Cloud Natural Language, AWS Comprehend, Azure AI services, formerly Azure Cognitive Services). For experimentation, Jupyter notebooks are commonly used.

Jobs and companies: Typical titles include NLP Engineer, Machine Learning Engineer (NLP), or Data Scientist (NLP). Many big tech companies have NLP teams. For example, Google (search, Translate), Amazon (Alexa), Apple (Siri), Microsoft (Azure AI), and Meta (content filtering) all hire NLP experts. OpenAI, Google DeepMind, and Amazon’s AI research teams actively publish NLP models and hire researchers. Even content startups (Grammarly, Duolingo, transcription services) need NLP talent. With specialized NLP skills, you could see job listings at these firms with salaries often well over $150K.

How to begin: Start with linguistics basics (parts of speech, semantics) and Python. Learn text processing (tokenizing, lemmatization) with NLTK or spaCy. Take an NLP course or specialization (Coursera and Udacity have good ones). Practice by building projects like a chatbot, spam detector, or sentiment analyzer. Explore Hugging Face’s tutorials to fine-tune a transformer model on a text dataset. Contribute to NLP competitions on Kaggle or Hugging Face. As you gain experience, delve into advanced topics (attention mechanisms, sequence-to-sequence models) and experiment with open-source models (BERT, GPT) to see how they work in real applications.
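A sentiment analyzer or spam detector is a typical first NLP project. The sketch below trains a multinomial Naive Bayes classifier with add-one (Laplace) smoothing on a handful of invented, labeled reviews; it shows the tokenize, count, and score pipeline that libraries such as scikit-learn or NLTK wrap for you. The training sentences and labels are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # log P(label) + sum of log P(word | label), in log space to avoid underflow
            score = math.log(n_docs / total_docs)
            for word in tokenize(text):
                if word in self.vocab:  # skip words never seen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

texts = [
    "great product, works perfectly",
    "love it, excellent quality",
    "terrible, broke after one day",
    "awful support and poor quality",
]
labels = ["pos", "pos", "neg", "neg"]
model = NaiveBayes().fit(texts, labels)
print(model.predict("excellent, works great"))
print(model.predict("poor and terrible"))
```

With four training sentences this is only a demonstration; the same code becomes useful once it is trained on thousands of labeled reviews or emails.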

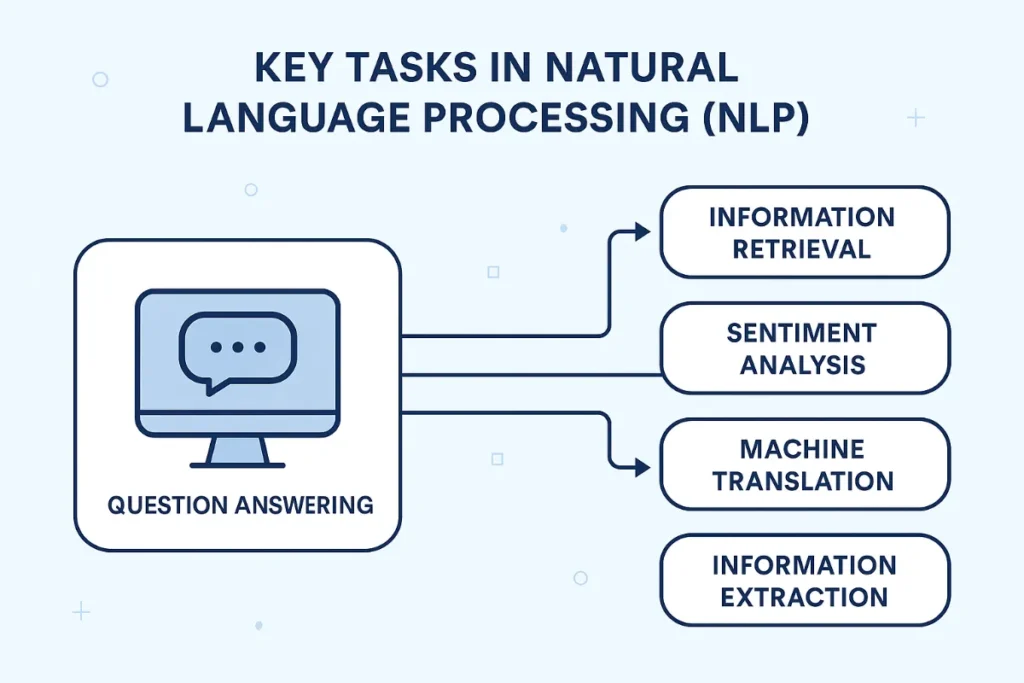

Figure: Key tasks in Natural Language Processing (NLP) include information retrieval, sentiment analysis, machine translation, information extraction, and question answering. NLP applications help computers process and generate human language in systems like Alexa, Google Search, chatbots, and translation services.

Generative AI

Generative AI refers to AI systems that can create new content. This includes models that generate text (like GPT-4), images (like DALL·E or Stable Diffusion), audio, video, or even code. In essence, generative AI combines “creative” generation with AI algorithms. A useful way to think of it is as “Generative + AI” – using artificial intelligence to generate content. As one industry article notes, generative AI tools such as GPT and DALL·E are “revolutionizing industries such as entertainment, advertising, and content creation”. In 2025, generative AI is one of the hottest tech skills. Coursera’s report already identifies “GenAI” as the fastest-growing skill (866% YoY increase).

Why it matters: Generative AI is transforming how work gets done. It automates creative tasks (writing, designing graphics, coding snippets) that used to require human effort. Because of this, companies are urgently looking for people who understand generative models. Job postings for roles like “AI Prompt Engineer” or “Generative AI Engineer” are on the rise. Salaries are high: OpenAI (a leading GenAI firm) advertises research scientist roles in the $295K–$440K range. Moreover, sectors from healthcare (drug discovery) to finance (report generation) are piloting generative AI solutions, meaning demand spans industries.

Current and future use cases:

- Text generation: Automatic article writing, summarization, and conversational bots. (E.g. ChatGPT-based tools that draft emails or answer customer queries.)

- Image and video synthesis: Creating marketing graphics, designing game assets, or even generating full short films. Generative AI is also reaching robotics: Samsung’s “Ballie” home robot uses conversational GenAI to interact naturally with people.

- Code generation: Tools like GitHub Copilot write code from comments. This is already speeding up software development.

- Scientific R&D: For example, the biotech startup Cradle uses Google Cloud’s generative AI to design proteins for drug discovery, drastically accelerating research.

- Customer service: Best Buy is launching a generative AI virtual assistant to troubleshoot customer issues and schedule appointments, reducing the load on human agents.

- Personalization: AI that creates custom music playlists, virtual fashion try-ons, or tailored lesson plans.

Looking ahead, generative AI will become integrated into almost every digital product. Expect AI co-pilots in business apps (legal analysis, research summarization), advanced AI artists creating digital art on demand, and more sophisticated AI tutors or entertainment companions.

Figure: Illustration of Generative AI: using artificial intelligence to generate content (text, images, audio, video, code, etc.). Generative AI tools like GPT and DALL·E are redefining creative workflows. This skill includes techniques like training large language models (LLMs) and designing prompts to guide AI generation.

Key tools and platforms: Familiarity with large models and AI APIs is essential. Common tools include OpenAI’s models (GPT-4, DALL·E) and platforms like Hugging Face (which hosts many generative models and provides libraries). For images, tools like Stable Diffusion, Midjourney, and Runway ML are popular. Programming frameworks (TensorFlow, PyTorch) are used to build or fine-tune generative models. Many companies also offer generative AI services on the cloud (Google Vertex AI, Azure OpenAI Service, AWS Bedrock). Importantly, prompt engineering has become a skill in its own right – learning how to craft effective inputs (prompts) to get desired outputs from AI. (As one source notes, prompt engineering is a top AI skill for 2025.)
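Prompt engineering is easier to iterate on when prompts are built from reusable parts rather than typed ad hoc. The sketch below assembles a few-shot prompt (instruction, worked examples, then the new input) as a plain string. The `build_few_shot_prompt` helper and the template wording are hypothetical, and sending the string to a model API (OpenAI, Vertex AI, Bedrock, and so on) is not shown.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Assemble instruction + worked examples + new input into one prompt string."""
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    # End with an open "Output:" so the model continues the established pattern
    parts.append(f"Input: {new_input}")
    parts.append("Output:")
    return "\n".join(parts)

prompt = build_few_shot_prompt(
    "Classify the customer message as BILLING, TECHNICAL, or OTHER. Reply with one word.",
    [
        ("I was charged twice this month.", "BILLING"),
        ("The app crashes when I log in.", "TECHNICAL"),
    ],
    "My invoice shows the wrong address.",
)
print(prompt)
```

Keeping the instruction and examples as data makes it simple to swap in different example sets, compare the model's outputs, and keep the version that works best, which is the iterative loop prompt engineering involves.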

Jobs and companies: Roles often titled AI Researcher, Generative AI Engineer, or AI Scientist (LLM) are emerging. Startups and big tech alike hire these skills. For example, OpenAI, Google DeepMind, and Microsoft Research are at the forefront of generative AI, while Adobe and Canva integrate AI into creative products. Gaming and entertainment companies (Unity, Netflix) explore AI-generated content. Even fast-food chains partner on AI: McDonald’s is collaborating with Google to use generative AI in marketing and operations. On LinkedIn and job boards, expect listings for Prompt Engineer, AI Developer (Generative AI), and related titles at companies investing in AI innovation.

Getting started: Begin by learning how to use existing generative models. For text, try the OpenAI API or Hugging Face’s GPT models; for images, experiment with Stable Diffusion or DALL·E in online interfaces. Study courses on generative AI (Udemy and Coursera offer “Generative AI with LLMs” classes). Learn the underlying techniques: neural network architectures (Transformers for text, GANs/diffusion models for images). Build simple projects, like a text completion app or an AI-based art generator. Practice prompt engineering by iterating prompts to refine AI outputs. Finally, dive into more advanced topics, such as fine-tuning a language model on custom data or building a small GAN, to understand how these systems are trained.
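Before touching an LLM, it can help to see the core generative idea in miniature: learn which word tends to follow which from training text, then sample new sequences. The bigram (Markov chain) model below is a toy stand-in for a text completion app, not how GPT-style Transformers work internally (they condition on long contexts using learned neural networks). The training text is made up.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Map each word to the list of words observed right after it."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def complete(followers, prompt, max_words=8, seed=None):
    """Extend the prompt one word at a time by sampling an observed follower."""
    rng = random.Random(seed)
    out = prompt.split()
    for _ in range(max_words):
        options = followers.get(out[-1])
        if not options:  # no observed continuation, so stop generating
            break
        # Choosing uniformly from the list weights each follower by how often it was seen
        out.append(rng.choice(options))
    return " ".join(out)

corpus = (
    "the model learns patterns from data . the model generates new text . "
    "new text comes from patterns in data ."
)
followers = train_bigrams(corpus)
print(complete(followers, "the model", seed=42))
```

Changing the seed produces different completions from the same learned statistics, a small-scale version of why generative models can give varied answers to the same prompt.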

Computer Vision

Computer Vision (CV) is the AI field that enables computers to see and understand images and video. IBM defines it as “a field of artificial intelligence (AI) that uses machine learning and neural networks to teach computers and systems to derive meaningful information from digital images, videos, and other visual inputs”. In other words, CV trains machines to interpret the visual world, much like human vision, but with cameras and algorithms.

Why is CV in demand? So many products now rely on visual data. Self-driving cars use computer vision to detect pedestrians and road signs. Smartphones use it for face recognition. Retailers use it to monitor checkout and inventory. Medical imaging (X-rays, MRIs) employs CV to spot anomalies. In 2025 and beyond, all of these applications will keep growing. Analysts forecast the computer vision market to hit $386 billion by 2031, up from $126B in 2022. Companies across tech and industry need CV experts to bring these systems to life.

Use cases:

- Autonomous vehicles: Tesla, Waymo, and others use CV to recognize cars, bikes, pedestrians, and lanes. Computer vision engineers often focus on object detection and scene understanding for this reason.

- Surveillance and security: CV powers facial recognition (e.g. unlocking phones) and anomaly detection in security cameras.

- Robotics and drones: Robots use vision to navigate, pick objects, or interact with environments. Drones use CV for mapping and inspection.

- Healthcare imaging: Analyzing medical images (e.g. detecting tumors in scans) is a growing CV field.

- AR/VR and entertainment: Augmented reality apps (Snapchat filters, virtual try-on) use CV to overlay graphics on live video.

- Industrial inspection: Manufacturing plants use CV to inspect products for defects at high speed.

Industries relying on CV include autonomous vehicles, robotics, augmented/virtual reality, and healthcare. As one source puts it, computer vision “is being used in applications like facial recognition, autonomous vehicles, and medical imaging”. The demand is reflected in salaries, although estimates vary by source (the salary table above lists a lower average): some put the average CV engineer’s pay at around $168,000 per year, with top roles paying above $200K.

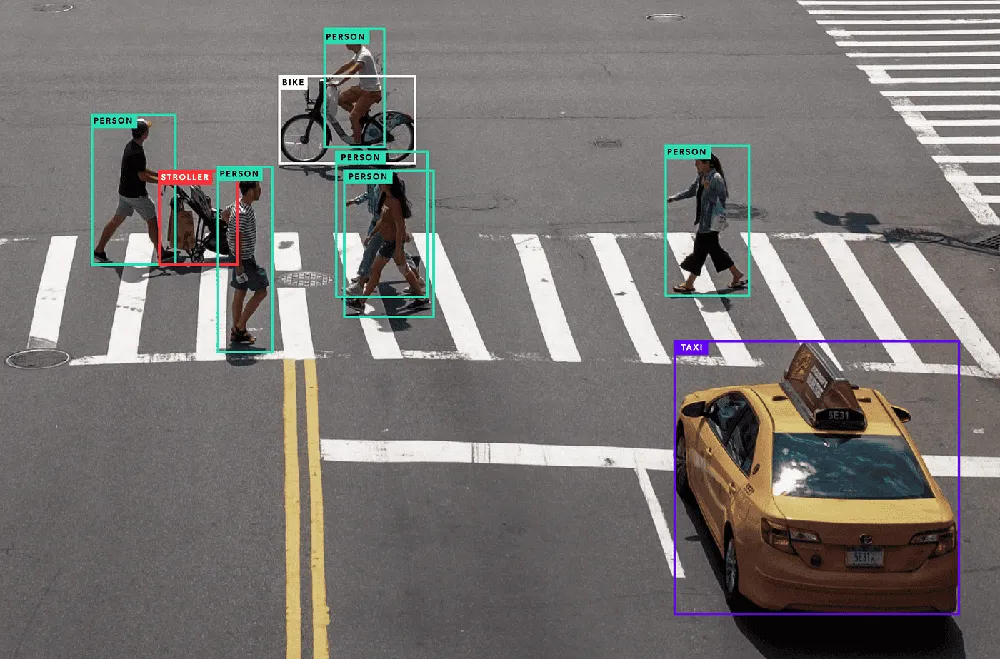

Figure: Example of computer vision in action. An object-detection model has labeled people, a bike, and a taxi in a street scene (bounding boxes). Tasks like this (detecting and classifying objects in images) are central to computer vision. Technologies like this are used in self-driving cars, surveillance cameras, and robotics.
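Detection models like the one in the figure output bounding boxes, and a standard way to score a predicted box against a ground-truth box is Intersection over Union (IoU): the overlap area divided by the combined area. Detection metrics such as mAP are built on IoU thresholds (0.5 is a common one). The sketch below assumes boxes given as (x1, y1, x2, y2) corner coordinates; the example boxes are made up.

```python
def iou(box_a, box_b):
    """Intersection over Union for boxes given as (x1, y1, x2, y2) with x1 < x2, y1 < y2."""
    # Corners of the intersection rectangle
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)  # zero if the boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

ground_truth = (50, 50, 150, 150)  # hypothetical labeled "person" box
prediction = (60, 60, 160, 160)    # model output, shifted by 10 pixels
score = iou(ground_truth, prediction)
print(f"IoU = {score:.3f}, counted as a hit at 0.5: {score >= 0.5}")
```

Here the boxes overlap on a 90 x 90 region out of a combined 11,900 square pixels, so the prediction clears a 0.5 threshold despite being slightly off.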

Tools and frameworks: A foundational CV tool is OpenCV, a popular library for image processing. Modern CV relies heavily on deep learning frameworks: TensorFlow/Keras and PyTorch have built-in support for vision models (CNNs such as ResNet). Specialized libraries like Detectron2 and Ultralytics (for the YOLO family of models) implement state-of-the-art detection models. For on-device deployment, TensorFlow Lite and Google’s MediaPipe facilitate CV on mobile or edge devices. Cloud services (Google Vision API, AWS Rekognition, Azure Computer Vision) let you apply pre-built CV models via API. Skills to learn here include convolutional neural networks (CNNs), object detection and segmentation techniques, and libraries like OpenCV.

Jobs and companies: Common titles include Computer Vision Engineer, Vision Scientist, or Robotics Perception Engineer. Big tech companies (Tesla, Nvidia, Google, Apple) actively recruit CV specialists. For instance, Tesla and Waymo need CV engineers for self-driving, Apple for Face ID and ARKit, and Meta for VR/AR. Other players include robotics firms (Boston Dynamics), surveillance companies, and healthcare AI startups. The highest-paying roles often require Ph.D.-level expertise. Even companies outside tech use CV: automakers, airlines, and retailers have vision projects.

Getting started: Build on machine learning foundations (especially deep learning). Start with OpenCV tutorials (learn to process images, detect edges, etc.). Then take a computer vision course (many exist on Coursera/Udemy). Work through projects like image classification on MNIST/CIFAR datasets. Learn to use pre-trained CNNs (e.g. ResNet) for classification with PyTorch or TensorFlow. Next, tackle object detection (e.g. train a YOLO model). Practice by building simple vision apps: a face detector, a gesture recognizer, or an autonomous-driving simulation. Online challenges (Kaggle’s image competitions) are great practice. Over time, familiarize yourself with techniques like image segmentation and video analysis, and master a CV framework (TensorFlow Object Detection API or PyTorch ecosystem).
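Edge detection, usually one of the first OpenCV exercises, works by sliding a small kernel over the image and measuring how sharply brightness changes. The dependency-free sketch below applies the 3x3 Sobel kernels to a tiny made-up grayscale image stored as nested lists; with OpenCV you would call `cv2.Sobel` or `cv2.Canny` on a NumPy array instead. CNNs learn kernels like these from data rather than having them hand-designed.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # responds to left-right brightness changes
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # responds to top-bottom brightness changes

def apply_kernel(img, kernel, r, c):
    """Weighted sum of the 3x3 neighborhood centered on pixel (r, c)."""
    return sum(
        kernel[i][j] * img[r + i - 1][c + j - 1]
        for i in range(3)
        for j in range(3)
    )

def sobel_magnitude(img):
    """Gradient magnitude for interior pixels (border pixels are left at 0 for simplicity)."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = apply_kernel(img, SOBEL_X, r, c)
            gy = apply_kernel(img, SOBEL_Y, r, c)
            out[r][c] = math.hypot(gx, gy)
    return out

# Hypothetical 5x6 image: dark left half (0), bright right half (255)
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_magnitude(image)
for row in edges:
    print(" ".join(f"{v:5.0f}" for v in row))
```

The output is large only in the two columns straddling the dark/bright boundary and zero in the flat regions, which is exactly what an edge map should show.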

MLOps (Machine Learning Operations)

MLOps is the practice of deploying and maintaining machine learning models in production. It bridges the gap between ML development and real-world deployment. In essence, MLOps applies software engineering principles (DevOps) to machine learning. As one source describes, MLOps “combines machine learning and DevOps to streamline [deployment of] AI solutions at scale”. In 2025, this skill is critical because building a model is only half the battle—getting it to reliably serve users is equally challenging.

Demand for MLOps engineers is skyrocketing. LinkedIn’s Emerging Jobs report noted a 9.8× growth over five years in MLOps roles. Companies now understand that without robust MLOps practices, even a great AI model can fail to make an impact. Typical responsibilities include automating data pipelines, setting up training pipelines (CI/CD), containerizing models (e.g. with Docker), and monitoring model performance in production. This is vital for any application that continually learns from new data or integrates AI into products.

Use cases:

- CI/CD for ML: Automating the retraining and deployment of models as new data arrives. For example, a news recommendation model might retrain daily and redeploy automatically.

- Containerized deployment: Packaging models into Docker containers and orchestrating them with Kubernetes or serverless infrastructure, so services can scale.

- Model monitoring: Tracking metrics (accuracy, data drift, latency) in production and alerting if performance degrades.

- Versioning: Keeping track of model versions and data sets so you can roll back if needed.

- Data and feature engineering pipelines: Ensuring data flows smoothly from raw sources into model training. Tools like Apache Airflow or Kubeflow Pipelines are often used.
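Model monitoring often starts with a data-drift check: compare the distribution of a feature in live traffic against the distribution the model was trained on. The sketch below computes the Population Stability Index (PSI) over fixed bins. The bin edges, the simulated samples, and the 0.25 alert threshold (a widely quoted rule of thumb, not a standard) are assumptions; managed services such as SageMaker Model Monitor and Vertex AI Model Monitoring offer production versions of this idea.

```python
import math
import random

def histogram(values, edges):
    """Fraction of values in each bin defined by sorted edges (first and last bins are open-ended)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(v >= e for e in edges)  # number of edges at or below v
        counts[idx] += 1
    return [c / len(values) for c in counts]

def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a baseline sample and a live sample."""
    total = 0.0
    for e, a in zip(histogram(expected, edges), histogram(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

rng = random.Random(7)
training = [rng.gauss(50, 10) for _ in range(5000)]    # feature values at training time
live_ok = [rng.gauss(50, 10) for _ in range(5000)]     # same distribution in production
live_drift = [rng.gauss(60, 10) for _ in range(5000)]  # production mean has shifted
edges = [30, 40, 50, 60, 70]
THRESHOLD = 0.25  # rule-of-thumb cutoff; tune for your use case

for name, sample in [("stable", live_ok), ("shifted", live_drift)]:
    score = psi(training, sample, edges)
    print(f"{name}: PSI={score:.3f} alert={score > THRESHOLD}")
```

In a real pipeline this check would run on a schedule for each important feature, and an alert could trigger investigation or the retraining loop described above.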

Every sector with AI products needs MLOps. For example, Netflix continuously updates its recommendation models for user behavior changes. In finance, fraud-detection models are retrained weekly as patterns evolve. E-commerce companies use MLOps to update personalization engines overnight. Without MLOps, these AI systems would not stay accurate or scalable.
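Continuous retraining usually includes a gate: each newly trained model is registered as a new version, but it only replaces the production model if it beats it on held-out validation data, and older versions stay available for rollback. The in-memory `ModelRegistry` below is a hypothetical stand-in for a real registry such as the MLflow Model Registry, SageMaker Model Registry, or Vertex AI Model Registry; the model artifacts and validation scores are made up.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ModelVersion:
    version: int
    model: object
    val_score: float  # higher is better, measured on held-out validation data

@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry that keeps every version for rollback."""
    versions: list = field(default_factory=list)
    production: Optional[int] = None

    def register(self, model, val_score):
        """Store a model as the next version number; registering does not deploy it."""
        mv = ModelVersion(len(self.versions) + 1, model, val_score)
        self.versions.append(mv)
        return mv

    def get(self, version):
        return next((v for v in self.versions if v.version == version), None)

    def promote_if_better(self, candidate, min_gain=0.0):
        """Serve the candidate only if it beats production by more than min_gain."""
        current = self.get(self.production)
        if current is None or candidate.val_score > current.val_score + min_gain:
            self.production = candidate.version
            return True
        return False

    def rollback(self, version):
        """Point production back at an earlier registered version."""
        if self.get(version) is None:
            raise ValueError(f"unknown version {version}")
        self.production = version

registry = ModelRegistry()
# Simulated weekly retraining runs: (placeholder artifact, validation accuracy)
for week, (artifact, score) in enumerate([("m1", 0.81), ("m2", 0.84), ("m3", 0.83)], 1):
    candidate = registry.register(artifact, score)
    promoted = registry.promote_if_better(candidate, min_gain=0.005)
    print(f"week {week}: v{candidate.version} score={score} promoted={promoted}")
print("serving version:", registry.production)
```

Week 3's model is registered but not promoted because it scores below the current production model, so version 2 keeps serving traffic.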

Key tools and frameworks: Learn DevOps fundamentals (Git, Docker, Kubernetes). Specific to MLOps, tools include TensorFlow Extended (TFX) for building pipelines, MLflow and Kubeflow for experiment tracking and serving models, and SageMaker Pipelines (AWS) or Azure ML pipelines (Microsoft) for end-to-end workflows. Familiarize yourself with cloud platforms: AWS SageMaker, Google Cloud Vertex AI, and Azure Machine Learning have built-in MLOps features (model registry, monitoring, etc.). Infrastructure-as-code tools (Terraform) and CI/CD services (Jenkins, GitHub Actions) are also important. Essentially, MLOps engineers pick the right tools to take an ML project from Jupyter notebook to a robust service.

Jobs and companies: Titles include MLOps Engineer, AI Infrastructure Engineer, or Machine Learning Platform Engineer. Major tech firms have dedicated MLOps teams: Netflix (which built and open-sourced Metaflow), Uber (Michelangelo platform), Google (Vertex AI), and AWS hire MLOps specialists. Cloud providers themselves are big employers (AWS, GCP, Azure) since they build these services. Finance, retail, and manufacturing companies with heavy AI usage also hire MLOps talent. Given the niche skill set, salaries are high; for instance, senior MLOps roles are often in the six-figure range, and one source noted compensation for ML/MLOps roles jumped about 20% year-over-year.

Learning path: You’ll need software development experience. Start by containerizing a simple ML model (e.g. wrap a trained model in a Flask API inside a Docker image). Learn Kubernetes basics to deploy containers. Then take an MLOps specialization or workshop (Coursera and Udacity offer MLOps tracks). Practice setting up CI/CD: host your code on GitHub and configure automated tests/deployments (GitHub Actions or Jenkins). Play with MLflow or Kubeflow to log models and metrics. Finally, implement a small end-to-end project: for example, automate the full cycle of training a model on new data and deploying it. Real-world internships or labs in a data engineering team are also valuable to see MLOps workflows in action.
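The first step in that path, putting a trained model behind an HTTP endpoint, can be sketched without any framework. The example below is a minimal WSGI app (Flask apps are WSGI apps too) that accepts JSON features and returns a prediction. The linear `predict` function, its feature names, and its weights are hypothetical placeholders for a real trained model, and the standard-library `wsgiref` server is meant for local testing only, not production.

```python
import json
from wsgiref.simple_server import make_server

# Hypothetical "trained" model: weights you would normally load from a saved artifact
WEIGHTS = {"sqft": 150.0, "bedrooms": 10000.0}
BIAS = 20000.0

def predict(features):
    return BIAS + sum(WEIGHTS[name] * float(features[name]) for name in WEIGHTS)

def app(environ, start_response):
    """WSGI entry point: POST /predict with a JSON object of feature values."""
    if environ["REQUEST_METHOD"] != "POST" or environ.get("PATH_INFO") != "/predict":
        start_response("404 Not Found", [("Content-Type", "application/json")])
        return [b'{"error": "not found"}']
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        features = json.loads(environ["wsgi.input"].read(length))
        body, status = {"prediction": predict(features)}, "200 OK"
    except (ValueError, KeyError, TypeError) as exc:
        # Malformed JSON, a missing feature, or a non-numeric value
        body, status = {"error": str(exc)}, "400 Bad Request"
    start_response(status, [("Content-Type", "application/json")])
    return [json.dumps(body).encode("utf-8")]

def serve(port=8000):
    """Run a local development server; use a production WSGI server inside containers."""
    with make_server("", port, app) as server:
        server.serve_forever()
```

After calling `serve()`, a POST to `/predict` with `{"sqft": 1000, "bedrooms": 2}` should return `{"prediction": 190000.0}` for these placeholder weights. Packaging the file into a Docker image and running it as the container's command is then the containerization step described above.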

Conclusion

In summary, Machine Learning, NLP, Generative AI, Computer Vision, and MLOps stand out as the top AI skills for high-paying roles in 2025. These skills are critical in today’s projects and are backed by surging demand: many AI roles average above $150K, and senior positions at top firms can exceed $200K. Learning these skills will open doors to roles at Google, Amazon, Microsoft, Tesla, and dozens of other innovators. If you’re a student or career-switcher, start by building foundations (math and programming), then use online courses and hands-on projects to gain each skill. As you specialize, contribute to GitHub projects, participate in hackathons, or publish a small portfolio project. Continuous learning is key: AI is evolving fast, so staying current (through blogs, research papers, or communities) will keep your skills in demand.

By mastering these top AI skills, you’ll position yourself for some of the most sought-after and lucrative tech roles of the future.

Sources: Authoritative industry and career reports from Coursera, LinkedIn, Forbes, Glassdoor, and others provide the data and insights on trends, salaries, and skill definitions used above. Each skill’s tools and use cases are corroborated by leading tech training resources and real-world company examples (as cited).

FAQs related to “Top 5 AI Skills for High-Paying Jobs in 2025”:

1. What are the top AI skills that can lead to high-paying jobs in 2025?

The top AI skills include Machine Learning, Natural Language Processing (NLP), Generative AI, MLOps, and Computer Vision. These are in high demand across industries and offer lucrative career opportunities.

2. How can I start learning AI skills with no prior tech background?

You can begin with free or beginner-friendly platforms like Coursera, edX, or Udacity. Start with Python programming, then move to AI fundamentals and gradually advance to specialized fields like ML and NLP.

3. Are AI certifications worth it for landing high-paying jobs?

Yes, AI certifications from recognized platforms (Google, IBM, Stanford, DeepLearning.AI, etc.) can boost your credibility, demonstrate your expertise, and help you stand out to employers.

4. What industries are hiring professionals with AI skills in 2025?

Industries such as healthcare, finance, e-commerce, cybersecurity, and autonomous vehicles are actively hiring AI professionals, particularly those skilled in machine learning and generative AI.

5. Do I need a degree to get a job in AI?

While a degree in computer science or a related field helps, many employers now value skills and project experience more. Strong portfolios, real-world projects, and certifications can substitute for a traditional degree.