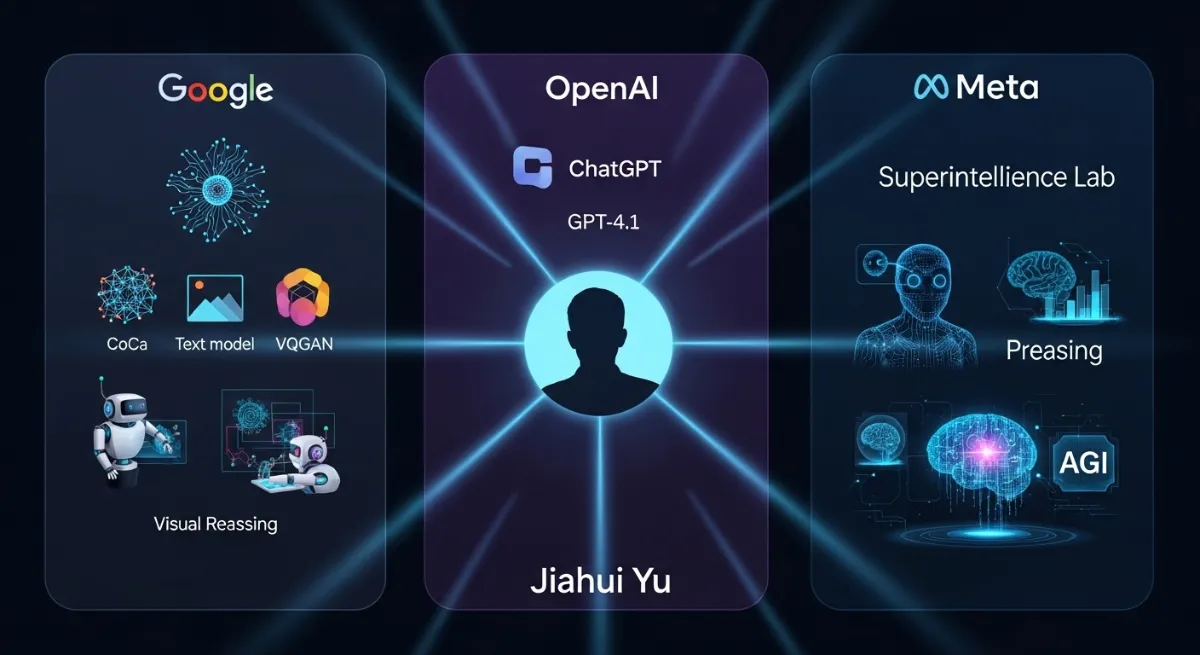

Jiahui Yu is a rising star in AI research whose career has spanned major labs: Google (including Google DeepMind), OpenAI, and now Meta. His work, from image-text foundation models to advanced multimodal LLMs, has directly influenced tools and models that AI practitioners use today. If you have spent the past two years building AI-powered projects (like the open-source “nocosttools”), you will appreciate how Yu’s contributions to vision-language models and visual reasoning underpin many of the advanced features in modern AI services. Below we trace Yu’s career year by year, highlight the key projects he worked on at each company, and explain their broader impact on the AI field.

Early Career at Google and DeepMind (through 2023)

Yu began his industry research career at Google Brain, which merged with DeepMind in 2023 to form Google DeepMind. There he co-authored numerous papers and Google AI blog posts on vision and multimodal learning. For instance, in 2022 he co-led CoCa (Contrastive Captioners), a unified image-text model developed on the Brain team.
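CoCa’s central idea is to train one model on two objectives at once: a contrastive loss that pulls each image embedding toward the embedding of its own caption, and a captioning loss that teaches a text decoder to generate that caption. The following is a minimal pure-Python sketch of such a combined objective, assuming precomputed embeddings and precomputed decoder token probabilities. The function names, the equal default weights, and the 0.07 temperature are illustrative assumptions, not values taken from the CoCa paper or Google’s code.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def _normalize(v: Vector) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0  # guard against all-zero vectors
    return [x / norm for x in v]


def _log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one row of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def contrastive_loss(img: Sequence[Vector], txt: Sequence[Vector], temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: image i should match caption i and no other caption in the batch."""
    img_n = [_normalize(v) for v in img]
    txt_n = [_normalize(v) for v in txt]
    n = len(img_n)
    # sim[i][j] = temperature-scaled cosine similarity between image i and caption j
    sim = [[sum(a * b for a, b in zip(i_vec, t_vec)) / temperature for t_vec in txt_n] for i_vec in img_n]
    image_to_text = -sum(_log_softmax(sim[i])[i] for i in range(n)) / n
    sim_t = [list(col) for col in zip(*sim)]  # transpose: each row is now one caption
    text_to_image = -sum(_log_softmax(sim_t[i])[i] for i in range(n)) / n
    return (image_to_text + text_to_image) / 2


def captioning_loss(target_token_probs: Sequence[float]) -> float:
    """Mean negative log-likelihood of the reference caption, given the probability
    the text decoder assigned to each correct next token."""
    return -sum(math.log(p) for p in target_token_probs) / len(target_token_probs)


def coca_style_loss(
    img: Sequence[Vector],
    txt: Sequence[Vector],
    target_token_probs: Sequence[float],
    w_con: float = 1.0,  # assumed weight for the contrastive term
    w_cap: float = 1.0,  # assumed weight for the captioning term
    temperature: float = 0.07,
) -> float:
    """Weighted sum of the contrastive and captioning terms, in the spirit of CoCa."""
    return w_con * contrastive_loss(img, txt, temperature) + w_cap * captioning_loss(target_token_probs)
```

Pairing each image with the wrong caption drives the contrastive term up sharply, and that penalty is the signal that aligns the image and text embedding spaces; the captioning term adds a generative signal on top.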

Google’s AI blog (May 2022) explains how CoCa learns aligned image and text embeddings by combining a contrastive objective with a captioning objective, advancing vision-language pretraining. In the same year he co-authored a Google blog post on an improved VQGAN for image generation, work that advanced generative image modeling by learning vector-quantized image representations more effectively. These projects, among others at Google, helped set new standards in how models handle vision and combine it with language.
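The vector-quantized representations behind VQGAN-style models rest on one simple step: each continuous latent vector produced by the image encoder is snapped to its nearest entry in a learned codebook, turning an image into a grid of integer tokens. The sketch below shows only that nearest-neighbour lookup, with hypothetical function names and a toy codebook. The improved VQGAN adds further refinements described in its paper, and real implementations also learn the codebook during training; neither is shown here.

```python
from typing import List, Sequence, Tuple


def quantize(
    latents: Sequence[Sequence[float]],
    codebook: Sequence[Sequence[float]],
) -> Tuple[List[int], List[List[float]]]:
    """Snap each latent vector to its nearest codebook entry (squared L2 distance).

    Returns the discrete token ids and the quantized vectors that replace the latents."""
    ids: List[int] = []
    quantized: List[List[float]] = []
    for z in latents:
        dists = [sum((a - b) ** 2 for a, b in zip(z, code)) for code in codebook]
        k = min(range(len(codebook)), key=dists.__getitem__)  # index of the closest code
        ids.append(k)
        quantized.append(list(codebook[k]))
    return ids, quantized


def quantization_error(latents: Sequence[Sequence[float]], quantized: Sequence[Sequence[float]]) -> float:
    """Mean squared distance between latents and their codes
    (the codebook and commitment terms in VQ training push this down)."""
    total = sum(sum((a - b) ** 2 for a, b in zip(z, q)) for z, q in zip(latents, quantized))
    return total / len(latents)


codebook = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
latents = [[0.1, -0.2], [4.0, 4.5], [0.9, 1.2]]
ids, quantized = quantize(latents, codebook)
print(ids)  # [0, 2, 1]
```

In a two-stage image generator of this kind, a second model then learns to predict these integer ids much as a language model predicts text tokens.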

- CoCa & VQGAN (2022): Yu co-authored Google’s blog posts on CoCa (a novel image-text encoder-decoder) and on an enhanced VQGAN for high-quality image generation. These innovations improved how models understand and generate visual content.

- PaLM 2 (2023): Yu was a core contributor to Google’s PaLM 2 large language model. Google’s I/O 2023 blog highlighted PaLM 2’s “improved multilingual, reasoning and coding capabilities”. The model was trained on text in more than 100 languages, and the PaLM 2 technical report shows it improving on the original PaLM across a wide range of reasoning, coding, and language benchmarks.

- Gemini Multimodal (2023): Shortly before leaving Google, Yu co-led multimodal work on Gemini at Google DeepMind, as Wired reported when covering his later move to Meta. Gemini is a family of natively multimodal models that jointly process images, audio, video, and text. The Gemini technical report (December 2023), which lists him as a co-author, describes models that “exhibit remarkable capabilities across image, audio, video, and text understanding”.

Together, these Google projects pushed the boundaries of AI: better image synthesis (VQGAN), richer image-text understanding (CoCa), stronger language-model reasoning (PaLM 2), and native multimodal reasoning (Gemini). This line of research fed into downstream Google products such as Bard (since renamed Gemini) and NotebookLM, which rely on multimodal, reasoning-capable models.

Transition to OpenAI (2023)

In late 2023 Yu left Google DeepMind to join OpenAI, where he became research lead of the Perception team (focused on vision and multimodal AI) and helped create some of its most advanced models. According to Wired, Meta CEO Mark Zuckerberg’s internal memo announcing Yu’s later hire described him as the “cocreator of o3, o4-mini, GPT-4.1 and GPT-4o” and former leader of OpenAI’s perception group. In short, Yu helped build models that can reason about images and handle multimodal inputs in ChatGPT.

- OpenAI o3 and o4-mini (2025): In April 2025 OpenAI released o3 and o4-mini, which it described as “the smartest models we’ve released to date.” These models are designed to think with images: they can crop, zoom, and rotate input images as part of their chain-of-thought reasoning. OpenAI’s blog explains that, unlike earlier models, o3 and o4-mini integrate image manipulation directly into their reasoning steps. This lets the model work through complex visual tasks (for example, analyzing a photo or reading hard-to-see text on a sign) without handing off to a separate specialized model. Because both models are offered in ChatGPT and the API, users and developers can apply this visual reasoning to their own images.

- GPT-4o (2024) and GPT-4.1 (2025): Yu also worked on OpenAI’s next-generation GPT models. GPT-4o (launched in May 2024) is an “omni” model that, according to its system card, accepts any combination of text, audio, image, and video as input and generates any combination of text, audio, and image outputs. It is trained end-to-end across text, vision, and audio, so all modalities are handled by the same neural network, which also lets it hold spoken conversations. Building on that, in April 2025 OpenAI introduced GPT-4.1, a family of models (including mini and nano variants) that improve on GPT-4o in coding and instruction following and support context windows of up to 1 million tokens. On Video-MME, a multimodal long-context benchmark, OpenAI reports a new state-of-the-art score of 72.0% in the long, no-subtitles category, 6.7 points above GPT-4o. In coding, GPT-4.1 scores 54.6% on SWE-bench Verified, a 21.4-point absolute gain over GPT-4o, which OpenAI says makes it a leading model for coding. These models push the frontier of what AI assistants can do (stronger code writing, better reasoning over long documents), and Yu’s work on them helped bring those capabilities to the API and ChatGPT.
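OpenAI has not published how o3 and o4-mini carry out image operations internally. As a purely hypothetical illustration of the kind of steps the o3 bullet describes, the sketch below applies a sequence of crop, zoom, and rotate operations to a toy grayscale image stored as nested lists, the way a reasoning trace might request them one after another. Every name here (`crop`, `zoom`, `rotate90`, `apply_steps`, the `OPS` registry) is invented for illustration.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Image = List[List[int]]  # toy grayscale image: a list of pixel rows


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Keep only the rectangle starting at (top, left) with the given size."""
    return [row[left:left + width] for row in img[top:top + height]]


def zoom(img: Image, factor: int) -> Image:
    """Nearest-neighbour upscaling: repeat every pixel `factor` times in each direction."""
    out: Image = []
    for row in img:
        wide = [px for px in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


# Hypothetical "tool" registry that a reasoning loop could call by name.
OPS: Dict[str, Callable[..., Image]] = {"crop": crop, "zoom": zoom, "rotate90": rotate90}


def apply_steps(img: Image, steps: Sequence[Tuple[str, tuple]]) -> Image:
    """Apply named operations in order, each one working on the previous result."""
    for name, args in steps:
        img = OPS[name](img, *args)
    return img


photo: Image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
zoomed = apply_steps(photo, [("crop", (0, 1, 2, 2)), ("rotate90", ()), ("zoom", (2,))])
print(zoomed)  # [[5, 5, 2, 2], [5, 5, 2, 2], [6, 6, 3, 3], [6, 6, 3, 3]]
```

In a real system each intermediate image would be fed back to the model before it chooses the next step; here the sequence is fixed in advance to keep the example small.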

In sum, at OpenAI Yu helped drive multimodal reasoning and next-generation LLMs. Users benefit today: ChatGPT’s vision-capable reasoning models, such as o3, can analyze complex images on their own, thanks in part to Yu’s work. Similarly, GPT-4.1’s longer context and stronger coding skills mean that developers with two years of AI experience, like you, have access to much more powerful tools than even a year ago.

Joining Meta Superintelligence Labs (2025)

In mid-2025 Yu made his latest move, leaving OpenAI to join Meta as part of a major recruiting push by Meta CEO Mark Zuckerberg. Reports in June 2025 confirmed that Yu, along with several other top OpenAI researchers, had been hired into Meta’s new Superintelligence Labs. According to Reuters, Meta reorganized its AI efforts into this division in June 2025, with Alexandr Wang (former CEO of Scale AI) at the helm and Nat Friedman as co-lead. The lab has a high-stakes mission: accelerating progress toward artificial general intelligence (AGI) and what Meta calls superintelligence. Although details are still emerging, Yu’s track record suggests he will work on cutting-edge multimodal AI at Meta as well.

This transition matters beyond Yu’s résumé: it signals that Meta is doubling down on the research areas he specializes in. At Meta’s AGI-focused lab, Yu is positioned to help shape the next wave of Meta’s AI products, which could range from improved generative models to new AI features in its social platforms, messaging apps, or hardware such as AR glasses. It is too early to list specific models (none had been announced publicly at the time of writing), but the move itself made headlines: Wired and TechCrunch reported that Yu “joined Meta’s superintelligence team” along with three colleagues. Meta’s internal memo credited him as a “co-leader of multimodal at Gemini” and an architect of OpenAI’s latest models, credentials he now brings to Meta’s projects.

Broader Impact on AI and the Developer Community

Across these transitions, Yu’s career reflects and reinforces major trends in AI. At Google, his work helped bridge vision and language (CoCa, Gemini) and strengthen language-model reasoning (PaLM 2). At OpenAI, he advanced the integration of vision, audio, and long-context reasoning into foundation models (GPT-4o, o3/o4-mini, GPT-4.1). These contributions have trickle-down effects on the tools and libraries you use. For example:

- The vision-language techniques from CoCa and Gemini paved the way for today’s image-aware chatbots (like ChatGPT with image input) and advanced image generation pipelines.

- The multimodal GPT innovations mean that models can now answer questions about uploaded photos, transcribe and analyze speech, and incorporate visual data into reasoning – features you can leverage via the OpenAI API or tools built on it.

- Efficiency gains in models like GPT-4.1, including its lower-cost mini and nano variants, make services like “nocosttools” easier to build: developers can integrate these models into apps more affordably and serve more users on a modest budget.
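The multimodal features in the list above are reachable through OpenAI’s API. As a minimal sketch, assuming the official `openai` Python package and the Chat Completions endpoint, the code below builds a user message that combines a text prompt with an image, given either a public URL or a base64 data URL for a local file. The helper names are our own, and "gpt-4o" is just one vision-capable model choice; check OpenAI’s current documentation for the models available to your account.

```python
import base64
from typing import Any, Dict


def image_data_url(image_bytes: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL, usable in place of a web URL."""
    return f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")


def vision_message(prompt: str, image_url: str) -> Dict[str, Any]:
    """Build one Chat Completions user message that mixes text and an image."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": image_url}},
        ],
    }


def ask_about_image(prompt: str, image_url: str, model: str = "gpt-4o") -> str:
    """Send the message and return the model's text reply.

    Requires `pip install openai` and an OPENAI_API_KEY environment variable;
    the import is deferred so the helpers above work without the package."""
    from openai import OpenAI

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=[vision_message(prompt, image_url)],
    )
    return response.choices[0].message.content or ""


# Example (not run here; needs network access and an API key):
# with open("chart.png", "rb") as f:
#     print(ask_about_image("Summarize this chart.", image_data_url(f.read())))
```

Keeping message construction separate from the network call makes the payload easy to inspect and test without spending API credits.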

For someone with two years of AI experience and projects like nocosttools, this matters a lot. Yu’s projects have contributed to models and APIs you may already have used (for example, GPT-4o or o3 in ChatGPT). His career shows how cutting-edge research at big labs eventually becomes part of mainstream AI toolkits. In practical terms, his emphasis on multimodal reasoning and efficient models suggests future tools will be even more capable. Imagine updating your projects with a model that reads text and understands images seamlessly, or that uses an extended context window such as GPT-4.1’s million-token capacity; those advances trace back in part to work Yu contributed to.

Conclusion: Jiahui Yu’s AI career, from Google and DeepMind through OpenAI and now Meta, has focused tightly on building the next generation of intelligent models. Year by year, he has helped lead teams that expanded the field’s horizons: enabling models to understand images, video, and speech, and scaling up LLMs in capability and efficiency. His story illustrates continuous innovation, with each company move tied to concrete new models (Gemini, o3, GPT-4.1, and others) that moved the field forward. For AI practitioners like you, Yu’s impact shows up in the stronger models and tools now at your fingertips. As he takes on Meta’s ambitious agenda, more breakthroughs are likely, perhaps ones you will soon use in your own projects.

Sources: This analysis draws on Google AI blog posts and technical papers, OpenAI research and product releases, and news reports from Wired, Reuters, and TechCrunch detailing Yu’s roles and projects.

Who is Jiahui Yu and why is his career journey in AI significant?

Jiahui Yu is a prominent AI researcher known for his impactful contributions at Google, OpenAI, and Meta. His career journey in AI is significant because he has co-created groundbreaking models like CoCa, Gemini, GPT-4o, and GPT-4.1, which have advanced multimodal understanding and AI capabilities across the tech industry.

What were the major highlights of Jiahui Yu’s career at Google?

During his time at Google and DeepMind, Jiahui Yu worked on several notable projects: he co-led CoCa (a vision-language model), co-authored work on an improved VQGAN for image generation, contributed to PaLM 2, and co-led multimodal work for Gemini. These milestones mark a strong beginning in Jiahui Yu’s career journey in AI.

How did Jiahui Yu contribute to OpenAI’s GPT-4o and GPT-4.1 models?

As the Research Lead of OpenAI’s Perception team, Jiahui Yu played a key role in the development of GPT-4o and GPT-4.1, which introduced advanced multimodal reasoning and extended context understanding. These contributions mark a major leap in Jiahui Yu’s career journey in AI.

Why did Jiahui Yu move from OpenAI to Meta in 2025?

In 2025, Jiahui Yu was recruited into Meta’s newly formed Superintelligence Labs as part of Mark Zuckerberg’s push to hire top AI researchers, aligning with Meta’s drive toward AGI (artificial general intelligence) and superintelligence. The move is a strategic next step in Jiahui Yu’s career journey in AI, taking on the new challenge of building toward superintelligent systems.

How has Jiahui Yu’s work influenced developers and AI projects?

Developers worldwide, including indie creators and startup founders, benefit from Yu’s work on models like GPT-4.1 and on vision-language techniques like CoCa’s, which underpin tools for vision analysis, coding, and multimodal interaction. His career journey in AI has directly shaped how accessible and advanced these AI tools have become for the global tech community.