AI prompt engineering in 2026 refers to the practice of designing structured instructions, constraints, and context layers that allow AI systems to operate reliably inside real workflows.

- What AI Prompt Engineering Means Today

- Why Prompt Engineering Looked Like a Goldmine in 2025

- The Freelance Reality in 2026

- How to Build Prompt Engineering as a Durable Skill

- Tool Stack Used by Prompt Engineers in 2026

- Where Freelance Work Actually Comes From

- Risk, Responsibility, and Operational Ethics

- Who Prompt Engineering Is Not For

- What Has Changed Since 2025 (Context Update)

- Practical Next Step for Readers

- Conclusion

- FAQ related to AI Prompt Engineering

Since early 2025, the role of prompt engineering has shifted. What once appeared as a standalone freelance niche is now best understood as an infrastructure-level skill embedded within AI-powered systems such as content pipelines, customer-support automation, analytics workflows, and decision-support tools.

For freelancers and practitioners, this change matters because income and demand no longer come from prompt writing alone. They come from the ability to apply prompt engineering inside practical systems that reduce cost, improve consistency, or scale output.

This guide reflects the current reality. It explains what prompt engineering means today, how the freelance market has evolved, where real demand exists, and how the skill fits into sustainable AI-driven work.

What AI Prompt Engineering Means Today

AI prompt engineering is the practice of designing structured inputs, constraints, and contextual layers that guide large language models (LLMs) to behave predictably inside defined workflows.

Rather than treating prompts as one-off instructions, modern prompt engineering treats them as infrastructure components: repeatable, testable, and version-controlled assets that sit between human intent and machine output.

In practical terms, prompt engineering now involves:

- Defining roles, objectives, and behavioral boundaries for AI systems

- Structuring inputs so outputs are consistent and usable

- Managing context windows, memory, and formatting

- Reducing hallucinations and edge‑case failures

- Aligning outputs with downstream tools and users

This evolution mirrors how organizations now deploy AI: not as an experiment, but as an operational layer.
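Here is a minimal Python sketch of a prompt treated as a versioned, testable asset. It assumes a plain-text prompt assembled from a role, an objective, behavioral boundaries, and an output format. The `PromptTemplate` class, its field names, and the `ticket-summarizer` example are hypothetical illustrations. They are not a standard format or a library API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptTemplate:
    """A versioned prompt asset: role, objective, boundaries, and output format."""
    name: str
    version: str
    role: str
    objective: str
    boundaries: tuple[str, ...] = ()
    output_format: str = "Plain text."

    def render(self, user_input: str) -> str:
        """Fill the template with one user input, producing the final prompt text."""
        # Each boundary becomes a bullet; an empty tuple renders a visible placeholder.
        rules = "\n".join(f"- {rule}" for rule in self.boundaries) or "- (none)"
        return (
            f"You are {self.role}.\n"
            f"Objective: {self.objective}\n"
            f"Rules:\n{rules}\n"
            f"Output format: {self.output_format}\n\n"
            f"Input:\n{user_input}"
        )


# Hypothetical example asset; the name and version string are illustrative only.
SUMMARIZER_V2 = PromptTemplate(
    name="ticket-summarizer",
    version="2.0.0",
    role="a support analyst",
    objective="Summarize the customer ticket in two sentences.",
    boundaries=(
        "Use only facts stated in the ticket.",
        "If information is missing, say 'unknown' instead of guessing.",
    ),
    output_format="Two sentences, no bullet points.",
)

print(SUMMARIZER_V2.name, SUMMARIZER_V2.version)
print(SUMMARIZER_V2.render("My invoice for March was charged twice."))
```

The template is an immutable object with a name and a version string. That means it can live in version control and be reviewed and tested like any other code. The exact rendering layout is a design choice and should be adapted to the model in use.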

Why Prompt Engineering Looked Like a Goldmine in 2025

In early 2025, prompt engineering gained visibility because businesses were rapidly experimenting with conversational AI tools. Job boards briefly reflected this phase with explicit “Prompt Engineer” titles, and freelance platforms saw an influx of prompt‑only gigs.

As AI adoption matured, however, organizations stopped hiring for prompts in isolation. Prompt engineering was absorbed into broader roles spanning product, automation, content operations, analytics, and AI systems design.

This transition did not eliminate opportunity; it reframed it. The value shifted from novelty to reliability, from clever phrasing to system performance.

The Freelance Reality in 2026

In 2026, freelancers are rarely hired to “write prompts.” They are hired to solve operational problems using AI systems, where prompt engineering functions as a critical enabling layer rather than the final deliverable.

What buyers actually look for is reliability: predictable outputs, controlled behavior, and measurable business impact. Prompt engineering becomes valuable only when it is embedded inside workflows that reduce cost, save time, or increase consistency.

Where Demand Actually Exists

Current freelance demand concentrates around:

- AI-powered customer support systems where prompts define tone, escalation logic, and guardrails

- Content production pipelines that require consistent voice, factual accuracy, and formatting at scale

- Automation workflows where AI performs classification, summarization, or decision support

- Internal knowledge assistants built on structured prompts and private data

In these scenarios, clients are not paying for prompts; they are paying for outcomes such as faster response times, reduced staffing load, or higher-quality outputs.
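In a support system, escalation logic and guardrails are often split between the prompt and the code that reads its output. The sketch below makes a hypothetical assumption: the prompt tells the model to return JSON with `intent`, `confidence`, and `reply` fields. The intent names and the 0.7 threshold are placeholders, not recommended values.

```python
import json

# Hypothetical policy values; a real deployment would tune these per client.
ESCALATE_INTENTS = {"refund_dispute", "legal_threat", "account_security"}
MIN_CONFIDENCE = 0.7


def route_reply(model_output: str) -> str:
    """Decide whether an AI-drafted support reply can be sent or needs a human.

    Expects JSON like {"intent": "billing_question", "confidence": 0.92, "reply": "..."}.
    Anything that does not match that shape is escalated rather than sent.
    """
    try:
        data = json.loads(model_output)
        intent = str(data["intent"])
        confidence = float(data["confidence"])
        reply = str(data["reply"]).strip()
    except (json.JSONDecodeError, KeyError, TypeError, ValueError):
        return "escalate: unparseable output"

    if intent in ESCALATE_INTENTS:
        return f"escalate: sensitive intent '{intent}'"
    if confidence < MIN_CONFIDENCE:
        return f"escalate: low confidence {confidence:.2f}"
    if not reply:
        return "escalate: empty reply"
    return "send"
```

The function fails closed. Malformed output, a sensitive intent, low confidence, or an empty reply each send the case to a human rather than to the customer.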

Updated Freelance Earning Reality

Based on observed global freelance patterns across 2025–2026:

- Prompt-only tasks show declining demand and inconsistent pricing

- Prompt engineering combined with workflow design typically commands $40–80 per hour

- Prompt engineering combined with automation and tooling reaches $80–150 per hour

- Retainer-based AI operations consulting ranges from $2,000–6,000 per month, depending on scope

Importantly, higher rates correlate with ownership of the system, not ownership of the prompt text.

How to Build Prompt Engineering as a Durable Skill

Building prompt engineering as a sustainable capability requires progression beyond surface-level techniques. The skill compounds when treated as part of system design rather than creative writing.

Tier 1: Prompt Fundamentals (Required, Not Differentiating)

At this level, practitioners learn how language models interpret instructions and context. Skills include:

- Zero-shot and few-shot prompting

- Clear role and instruction framing

- Output formatting and structural constraints

- Context and tone control

These fundamentals are necessary but no longer sufficient. Most competent users reach this level quickly.
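The function below illustrates few-shot prompting combined with an output-format constraint by assembling a list of chat messages. The `role`/`content` dictionaries mirror the message shape that many chat-completion APIs accept. That is an assumption, so check your provider's documentation for the exact format. The sentiment task and examples are hypothetical.

```python
def build_few_shot_messages(
    instruction: str,
    examples: list[tuple[str, str]],
    query: str,
) -> list[dict[str, str]]:
    """Assemble a few-shot prompt as a list of chat messages.

    Each (input, output) pair becomes a user/assistant exchange, so the model
    sees the exact format it should reproduce. An empty examples list
    produces a zero-shot prompt.
    """
    messages = [{"role": "system", "content": instruction}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": query})
    return messages


messages = build_few_shot_messages(
    instruction=(
        "Classify the sentiment of each review. "
        "Answer with exactly one word: positive, negative, or neutral."
    ),
    examples=[
        ("The app crashes every time I log in.", "negative"),
        ("Setup took two minutes and it just works.", "positive"),
    ],
    query="It does what it says, nothing more.",
)
```

With an empty `examples` list, the same function produces a zero-shot prompt. That makes it easy to test whether the examples actually improve output consistency.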

Tier 2: Prompt Systems (Where Freelancers Compete)

This tier separates casual users from professionals. Prompt engineering becomes repeatable and testable.

Key capabilities include:

- Chaining prompts across multi-step tasks

- Designing reusable prompt templates

- Managing context windows and memory limits

- Using self-evaluation and critique prompts

- Implementing error-handling and fallback logic

At this stage, prompts behave less like text instructions and more like system components.
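The following sketch shows prompt chaining with error handling and a fallback path. It is a hypothetical two-step chain: first extract fields as JSON, then summarize from those fields only. `model` stands in for whatever client call your stack uses, and `fake_model` is a deterministic stub so the example runs without an API. Validation is done in code rather than through a separate critique prompt, which is one of several possible designs.

```python
import json
from typing import Callable

# Hypothetical stand-in for your model client: prompt text in, response text out.
ModelFn = Callable[[str], str]


def extract_then_summarize(ticket: str, model: ModelFn, max_retries: int = 2) -> dict:
    """Two-step prompt chain with validation, retry, and a safe fallback.

    Step 1 asks for structured fields as JSON; step 2 writes a summary from
    those fields only. If step 1 never yields valid JSON with the required
    keys, return a result flagged for human review instead of passing bad
    data downstream.
    """
    extract_prompt = (
        "Extract the product and the problem from the support ticket below. "
        'Respond with JSON only: {"product": "...", "problem": "..."}\n\n'
        f"Ticket:\n{ticket}"
    )
    fields = None
    for _ in range(max_retries + 1):
        raw = model(extract_prompt)
        try:
            candidate = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed output: retry the same step
        if isinstance(candidate, dict) and all(k in candidate for k in ("product", "problem")):
            fields = candidate
            break

    if fields is None:
        return {"status": "needs_review", "summary": None}  # fallback path

    summary_prompt = (
        "Write one sentence summarizing this support issue, using only these fields:\n"
        + json.dumps(fields)
    )
    return {"status": "ok", "summary": model(summary_prompt).strip()}


def fake_model(prompt: str) -> str:
    # Deterministic stub for local testing; a real call would go to an LLM API.
    if prompt.startswith("Extract"):
        return '{"product": "billing portal", "problem": "double charge"}'
    return " The customer was charged twice in the billing portal. "


print(extract_then_summarize("I was charged twice for March.", fake_model))
```

Retrying only the failed step keeps cost down. Returning a `needs_review` status instead of raising an exception lets the surrounding workflow decide how to hand the case to a person.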

Tier 3: Prompt + Automation (Highest-Value Work)

The highest-value freelance work emerges when prompt engineering is integrated into automation and tooling.

Examples include:

- Connecting prompts to spreadsheets, CRMs, or databases

- Building API-driven prompt workflows

- Using AI for classification, routing, or decision support

- Designing scalable content and support pipelines

Freelancers operating at this tier are compensated for business outcomes, not prompt quality alone.
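One common Tier 3 pattern is batch classification of rows exported from a spreadsheet or CRM. The sketch below uses Python's standard `csv` module on CSV text. `keyword_classifier` is a placeholder for an LLM classification call. The `message` column name and the routing table are assumptions made for illustration.

```python
import csv
import io
from typing import Callable

# Hypothetical routing table: label produced by the classifier -> owning queue.
ROUTES = {"billing": "finance-queue", "bug": "engineering-queue", "other": "support-queue"}


def keyword_classifier(text: str) -> str:
    """Placeholder for an LLM classification call (an assumption, not a real model)."""
    lowered = text.lower()
    if "invoice" in lowered or "charge" in lowered:
        return "billing"
    if "error" in lowered or "crash" in lowered:
        return "bug"
    return "other"


def route_rows(csv_text: str, classify: Callable[[str], str]) -> str:
    """Read exported rows, label each message, and append label and route columns."""
    reader = csv.DictReader(io.StringIO(csv_text))
    fieldnames = list(reader.fieldnames or []) + ["label", "route"]
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        label = classify(row["message"])
        row["label"] = label
        # Unknown labels fall back to the general queue instead of failing.
        row["route"] = ROUTES.get(label, ROUTES["other"])
        writer.writerow(row)
    return out.getvalue()


sample = "id,message\n1,My invoice shows a double charge\n2,The app shows an error on login\n"
print(route_rows(sample, keyword_classifier))
```

The classifier is passed in as a function, which keeps the pipeline testable. You can replace the keyword stub with a real model call without touching the CSV handling or the routing rules.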

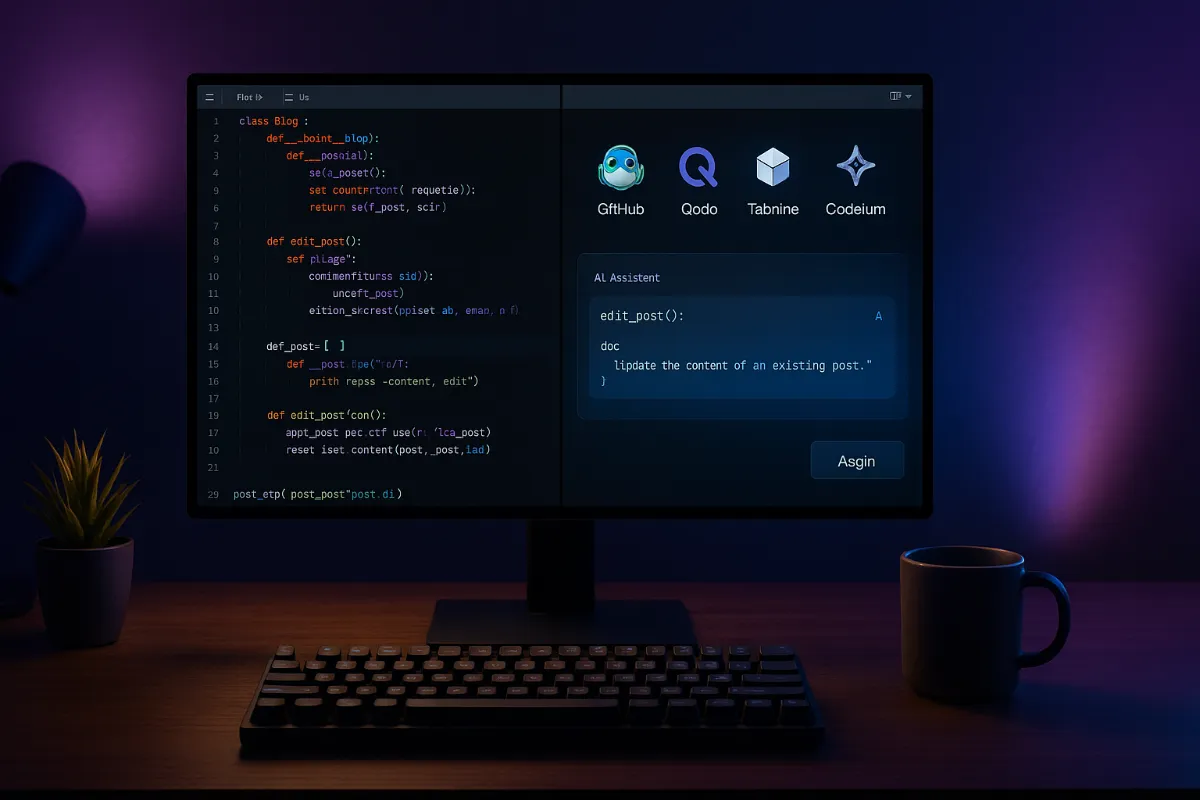

Tool Stack Used by Prompt Engineers in 2026

Modern prompt engineering relies on ecosystems rather than single interfaces. Tools are selected based on how prompts move from experimentation into production.

Readers who are new to applied AI may benefit from first exploring foundational resources on AI tools and practical AI workflows, which provide context for how these systems are assembled.

Core Experimentation and Reasoning Tools

- OpenAI Playground for controlled testing

- Claude for long-context reasoning and document analysis

- Gemini for search-grounded and retrieval-aware outputs

Workflow and System Tooling

- PromptLayer for versioning, evaluation, and iteration tracking

- LangChain or Flowise for orchestrating multi-step prompt flows

- Google Sheets with AI integrations for operational control layers

Serious clients increasingly expect familiarity with prompt lifecycle management, not just prompt creation.
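Much of prompt lifecycle management comes down to scoring each prompt version against the same test cases before it ships. Tools such as PromptLayer offer versioning and evaluation features. The plain-Python sketch below does not use any tool's API. It models each prompt version as a hypothetical function from input to output, with stub versions `v1` and `v2`.

```python
from typing import Callable

# A prompt version is modeled as a function from input text to model output.
# Hypothetical: in practice it would render a template and call a model.
PromptVersion = Callable[[str], str]
# A test case pairs an input with a check that the output must pass.
Case = tuple[str, Callable[[str], bool]]


def evaluate(version: PromptVersion, cases: list[Case]) -> float:
    """Return the fraction of test cases whose output passes its check."""
    if not cases:
        return 0.0
    passed = sum(1 for text, check in cases if check(version(text)))
    return passed / len(cases)


def compare(versions: dict[str, PromptVersion], cases: list[Case]) -> dict[str, float]:
    """Score every version on the same cases so regressions are visible."""
    return {name: evaluate(fn, cases) for name, fn in versions.items()}


ALLOWED = {"positive", "negative", "neutral"}
CASES: list[Case] = [
    ("Love it", lambda out: out in ALLOWED),              # format check only
    ("Terrible support", lambda out: out == "negative"),  # exact-label check
    ("It's fine", lambda out: out in ALLOWED),            # format check only
]


def v1(text: str) -> str:
    # Stub for an older prompt that sometimes answers in chatty prose.
    return "positive" if text == "Love it" else "Probably neutral, I think."


def v2(text: str) -> str:
    # Stub for a revised prompt that returns bare labels.
    return "negative" if "Terrible" in text else "positive"


scores = compare({"v1": v1, "v2": v2}, CASES)
print(scores)  # v1 passes 1 of 3 cases; v2 passes all 3
```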

Where Freelance Work Actually Comes From

While freelance platforms such as Upwork and Fiverr continue to host AI-related gigs, higher-quality and longer-term work increasingly originates outside open marketplaces.

Primary Work Sources in 2026

- Direct outreach to SaaS founders implementing AI internally

- Agencies modernizing content, support, or analytics workflows

- Education platforms and publishers scaling structured content

- Small and mid-sized businesses replacing manual processes

Entry points vary. Some businesses begin by exploring broader AI-driven income models, such as AI side hustles and online earning systems. Others start by improving operational efficiency through AI-powered content and automation tools.

Freelancers who frame their services around AI systems delivery—rather than prompt writing—tend to secure repeat work and retainers.

Risk, Responsibility, and Operational Ethics

As AI systems move closer to real users, prompt engineering carries operational risk. Poorly designed prompts can:

- Amplify hallucinations

- Leak sensitive context

- Create inconsistent user experiences

- Over‑automate human judgment

Responsible practitioners design guardrails, monitor outputs, and communicate limitations clearly—particularly in customer‑facing or decision‑support environments.
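One concrete guardrail against leaking sensitive context is to redact obvious personal data before it enters a prompt or a log. Outputs can then be checked for the same patterns. The regular expressions below are deliberately simple and are included only for illustration. They will miss many formats and are not a substitute for a vetted redaction policy.

```python
import re

# Simple illustrative patterns (assumptions); production redaction needs broader,
# tested rules for names, addresses, account numbers, and locale-specific formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact(text: str) -> str:
    """Replace emails and phone-like numbers before text enters a prompt or log."""
    text = EMAIL.sub("[EMAIL]", text)  # emails first, since they may contain digits
    return PHONE.sub("[PHONE]", text)


def leaks_contact_info(model_output: str) -> bool:
    """Post-check: flag outputs that still contain an email or phone-like number."""
    return bool(EMAIL.search(model_output) or PHONE.search(model_output))


print(redact("Contact jane.doe@example.com or +1 555-123-4567 today."))
```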

Who Prompt Engineering Is Not For

Prompt engineering is unlikely to be suitable for individuals seeking passive income or expecting immediate results without iteration. It also does not reward those who avoid technical workflows or rely exclusively on static prompt libraries.

The skill favors practitioners who are willing to test outputs, manage edge cases, and take responsibility for system behavior over time.

What Has Changed Since 2025 (Context Update)

Since early 2025, large language models have improved significantly in baseline reasoning and instruction-following. As a result, simple prompt tricks that once delivered outsized gains now offer diminishing returns.

What has increased in importance is system design: how prompts are layered, constrained, evaluated, and connected to real workflows. This shift explains why prompt engineering has moved away from standalone job titles and toward embedded operational roles.

Understanding this evolution is essential for anyone approaching the skill today.

Practical Next Step for Readers

For readers looking to apply these concepts, the most effective next step is not collecting prompt templates but studying how AI systems are assembled end to end. This includes understanding AI tools, automation layers, and real earnings or productivity use cases before attempting freelance delivery.

EduEarnHub publishes ongoing guides on AI tools, online earning strategies, and applied automation, designed to help learners build a practical, system-level understanding rather than surface-level familiarity.

Conclusion

AI prompt engineering remains valuable in 2026, but not as a shortcut skill or isolated service. Its real strength lies in enabling AI systems to function reliably at scale, under real constraints.

For freelancers who combine prompt engineering with workflow design, automation, and domain understanding, the opportunity remains substantial. The sustainable path forward is not chasing trends, but building durable capabilities aligned with how organizations actually deploy AI today.

To align with Google’s Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T) guidelines, this guide is based on:

- Direct observation of freelance AI market trends across 2025–2026

- Practical implementation of AI workflows in content systems and web tools

- Ongoing analysis of how organizations integrate LLMs into production environments

- Iterative testing of prompt systems rather than isolated prompt examples

Where figures are discussed (rates, demand patterns), they reflect market ranges and observed outcomes, not guarantees.

This article does not claim that prompt engineering is a guaranteed income source or a standalone career path. Instead, it documents how the skill functions when embedded within broader AI systems.

Readers should interpret examples and earning ranges as contextual indicators, not promises of results.

This guide was written to:

- Explain why prompt engineering evolved, not just what it is

- Clarify who benefits from learning it—and who may not

- Reduce overgeneralization and hype around AI freelancing

- Provide a durable, systems-level understanding rather than short-term tactics

It avoids:

- Keyword stuffing

- Overly promotional language

- Vague or untestable claims

FAQ related to AI Prompt Engineering

Is prompt engineering still a viable freelance skill in 2026?

Yes, when combined with workflow design, automation, or domain expertise. Prompt-only services have declining demand.

Do I need a technical background to learn prompt engineering?

A programming background is not mandatory, but comfort with systems, logic, and structured thinking is increasingly important.

How long does it take to become competent?

Basic proficiency can be achieved in weeks. Professional, system-level competence typically takes months of applied practice.

Is prompt engineering likely to be automated away?

While models continue to improve, human oversight remains necessary for system design, constraint definition, and risk management.

What type of work do clients actually pay for in prompt engineering?

Clients typically pay for outcomes such as reliable automation, consistent content systems, controlled AI behavior, and reduced operational effort, rather than for prompts alone.